tl;dr: I put a simple electronic kit for the Raspberry Pi on Kickstarter, and it didn't succeed. P&P made it look expensive, and I set my target too high. I'm wiser for the experience.

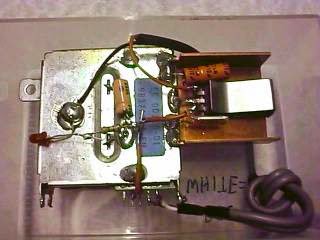

Earlier this year I was using a general-purpose prototyping board for the Raspberry Pi as little more than a home for a BNC socket and a passive filter, so I could use the Pi's onboard clock generator as an RF source. It wasn't very pretty, with bits of copper wire soldered to the earth lugs of the BNC socket, and most of the board was unused.

So I had an idea: why not make a dedicated RF breakout board for the Pi, with a BNC socket on board and just enough breadboarding space for a filter? No rocket science involved, but a handy little niche product. I'd put it on Kickstarter, and have a success on my hands.

A Dirty PCBs order and a three-week wait for China Post, and I had my boards fresh from China. Their "Protopack" order promises "10+-", in other words a bit more than ten if the process goes well and a bit less if it goes badly. My $10 got me 12 very nicely made boards, which for a prototype service is nothing short of astounding. Buoyed by this and a bag of connectors, I set to work on a set of prototypes and was soon using one of my Pi boards as a WSPR beacon.

All was good. Suppliers sorted, good social media reaction, let's put it on Kickstarter as a kit.

I'm no stranger to product fulfilment, so I wasn't surprised to read lots of Kickstarter advice about pricing everything to the last fraction of a penny. Horror stories of KS projects that hadn't accounted for postage and packing abounded, and I wasn't going to be one of them. So I did my homework, and created an exhaustive spreadsheet of all I'd need, down to the last piece of stationery.

In a quick list, I considered the following:

- The kit components

- A click-to-seal plastic bag for the kit

- Two pages of printed A4 instructions

- A sticky label for the kit

- A Language Spy sticker

- Two different packaging options: small cardboard box versus bag

- Padded envelope

- Dispatch note/invoice/address sticker stationery

- Different postage options: letter versus parcel, and prices for the different Royal Mail international regions

- Kickstarter's cut

- Between 5 and 10% failed delivery, lost in the post etc.

I priced each of the above in three columns, for 100, 500 and 1000 kits, and added VAT throughout, as I'm not VAT registered and so can't reclaim it. I made my decisions on packaging and postage: bag not box, and letter not parcel postage. I then put together a sample envelope with everything I'd be sending and took it to my local post office to check the weight and postage rate.

My gut feeling price for the components was about £2.50. As it turned out, that wasn't too far wrong for the 100 quantity, and it dropped below £2 for the higher quantities. The BNC connector was the most expensive component.

The sundries added up to more than I expected. When you are used to printing single sheets without a second thought it's a shock when you tot up the cost of a thousand two-page double sided instruction leaflets.

The biggest cost of them all was postage. Down the street, just under a pound; to Europe, somewhere under £2; to the USA, just over £3; to Australia, well over £3. I took a decision at this point to charge one flat reward rate. I know Kickstarter has an option for different shipping rates, but previous experience with online shops has taught me that no matter how well you explain and calculate shipping, you will always get some customers who put in an address on the other side of the world and select UK shipping. I want to make kits, not argue with people thousands of miles away who can't read.

So taking into account component prices, all the sundries, packing, postage, the Kickstarter cut, and a percentage for lost in transit, I came up with a £9 reward price. On Australian orders I would make not a lot, on US orders a bit more, and on UK orders a bit more than that. I expected most of my backers to be Americans, and this turned out to be the case.
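
The spreadsheet logic behind that flat price can be sketched as a small Python model. It is a minimal sketch under loudly stated assumptions: every figure in it (the sundries total, the exact postage rates, a combined 10% platform-and-payment cut) is a hypothetical placeholder chosen to sit near the numbers quoted above, not the real spreadsheet, and names such as `margin` and `POSTAGE` are purely illustrative. What it shows is the shape of the calculation: a flat pledge, minus fees, minus goods and postage inflated by the lost-in-transit allowance.

```python
# Minimal per-backer margin model for a flat-rate Kickstarter kit reward.
# ALL figures are hypothetical placeholders (GBP, VAT included), not real costs.

COMPONENTS = 2.50  # gut-feel component price at the 100-kit quantity
SUNDRIES = 0.60    # hypothetical: bag, instructions, label, sticker, envelope, stationery

# Hypothetical letter-rate postage, loosely following the figures quoted in the text.
POSTAGE = {"UK": 0.95, "Europe": 1.90, "USA": 3.10, "Australia": 3.60}

PLATFORM_FEE = 0.10  # assumed Kickstarter + payment processing cut, as a fraction of the pledge
LOSS_RATE = 0.10     # top of the 5-10% failed-delivery allowance


def margin(pledge: float, region: str) -> float:
    """Return what is left per backer after all costs, for a kit shipped to `region`."""
    shipped_cost = COMPONENTS + SUNDRIES + POSTAGE[region]
    # Replacing lost kits means paying for goods and postage (1 + LOSS_RATE) times per backer.
    expected_cost = shipped_cost * (1 + LOSS_RATE)
    fees = pledge * PLATFORM_FEE
    return pledge - fees - expected_cost


if __name__ == "__main__":
    for region in POSTAGE:
        print(f"{region:>9}: £{margin(9.00, region):.2f}")
```

With these made-up figures a £9 pledge leaves a little over £3.60 per UK kit but only about £0.73 per Australian one, the same ordering described above. Turning `COMPONENTS` into a dictionary keyed on quantity (100, 500, 1000) would mirror the three-column spreadsheet.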

Comparable Pi dev boards without a BNC socket cost somewhere under £5. I would have expected a hypothetical retail version of this kit, probably in the cardboard box packaging and with a percentage for the retailer, to sell somewhere around £6. Let's call it $10, for Americans. An online retailer would then add about £3.50 P&P, bringing it to around £9.50 delivered against my £9 reward. So I wasn't too far from the mark.

If all of the above seems a little too much detail, I make no apologies. It's very important to account for everything when you turn a gut-feeling price for the components into what the finished item is going to cost. Get it wrong, and it'll hurt.

So on to Kickstarter it went. I set the time for four weeks, and the target for 200 kits. This seemed neither too optimistic a target nor a huge amount of money. I put in an early bird offer with a pound off, and away it went.

This was my first Kickstarter campaign. I took as my models the campaigns I had previously backed that I thought had done a good job, and set out to add value, to be responsive to backers, and to publicise without being irritating at it. My social media friends did a sterling job of helping me, I worked on a set of software enhancements for using the Pi as an RF generator, and I wrote a set of updates detailing all the cool things I'd done with the board.

Along the way I had quite a few messages, which fell into three groups: backers, non-backers, and spam from people offering miracle marketing cures to guarantee me a Kickstarter staff pick. The spam I ignored; the backers and non-backers I engaged with. No brickbats on the whole, one or two suggestions for enhancements, and a few queries about the price, to which I replied by explaining the postage costs and the prices of comparable boards.

Progress started off well. For the first ten days, if you'd drawn a ruler along the line, I'd have been funded at the three-week mark. I was expecting it to tail off into the second week as it dropped from visibility, though, and it duly did. A few backers through weeks two and three, then a fresh spate of "rescue your failing project" spam from marketeers. In week four I got a healthy uptick in backers, but sadly it wasn't to be. It was somewhere just shy of 75% when the campaign expired, so no cheque for me to make kits for my backers and no metaphorical cigar.

It's disappointing when something like this doesn't come off. But it's important to retain perspective. My future didn't depend on this project, its development cost me very little and it wouldn't have earned me a huge amount had it succeeded. So it's no biggie, and it was a very interesting process to go through.

So... where did it go wrong, and what would I do differently next time?

There are two areas which I think contributed to the failure of this campaign.

- I set the sales quantity for success too high.

- Postage and packing inflated the price the user saw.

In the first area, there was no reason why I could not have set the bar at 100 kits, and had I done so, the project would have succeeded. Pure optimism on my part. If I do another one I'll set the bar at the minimum level, to give it that magic high percentage when it comes back into visibility at the end of the campaign.
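
The arithmetic behind that claim is easy to check. This sketch treats the target as a kit count and ignores the £1 early-bird discount; the final tally of 148 kits is a hypothetical stand-in for "just shy of 75%" of 200, and `percent_funded` is an illustrative helper, not anything Kickstarter provides.

```python
def percent_funded(kits_pledged: int, target_kits: int) -> float:
    """Percentage of the target reached, counting kits rather than pounds."""
    return 100 * kits_pledged / target_kits


# Hypothetical final tally: "just shy of 75%" of the 200-kit target.
kits_pledged = 148

for target in (200, 100):
    pct = percent_funded(kits_pledged, target)
    status = "funded" if pct >= 100 else "not funded"
    print(f"target {target}: {pct:.0f}% ({status})")
```

The same pledges that fell short of 200 kits would have cleared a 100-kit bar with room to spare.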

In the second area, there wasn't a lot I could have done to change the reward price. And consumers who are used to making the purchase decision on the item page, without seeing the P&P until the checkout, were always going to see the reward as a bit steep. If I have learned one thing from this process, it is that items costing under a tenner are disproportionately hit by postage costs. If I do another campaign, it'll be for a more expensive item.
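
To put a rough number on that, the sketch below compares how much of the delivered price is postage for the kit and for a dearer item. It assumes, hypothetically, the same £3.10 letter-rate postage to the USA for both, treats the £9 kit as £5.90 of goods plus that postage, and uses a made-up £40 item for comparison. A heavier item would likely cost more to post, so the comparison is only indicative.

```python
# Hypothetical: the same letter-rate postage to the USA applied to two items.
POSTAGE_USA = 3.10


def postage_share(goods_price: float, postage: float = POSTAGE_USA) -> float:
    """Fraction of the delivered price (goods + postage) that is postage."""
    return postage / (goods_price + postage)


# The kit without its postage, and a hypothetical £40 item.
for goods_price in (5.90, 40.00):
    share = postage_share(goods_price)
    print(f"£{goods_price:.2f} item: {share:.0%} of the delivered price is postage")
```

On these assumptions roughly a third of the kit's delivered price is postage, against well under a tenth for the dearer item.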

So there we are. I put up a Kickstarter campaign, it got quite a few backers, but not enough. And I'm still standing. I think the RF board still has a future, though I'm not sure I'll relaunch it in the same place. Watch this space, it could be coming to a website near you.